The Integrated Circuit

(1961 - Present)

The Transistor is simply an on/off switch controlled by electricity.

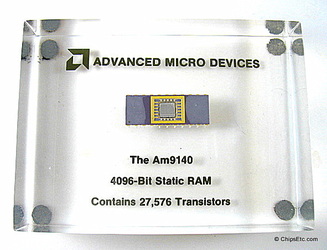

The Integrated Circuit combined numerous transistors onto a single chip.

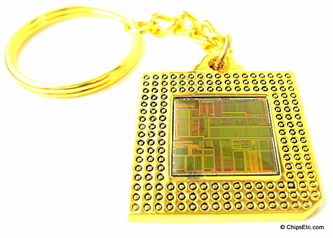

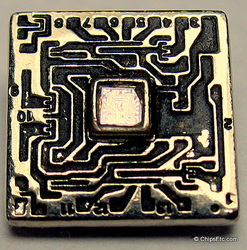

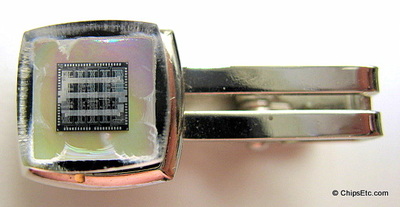

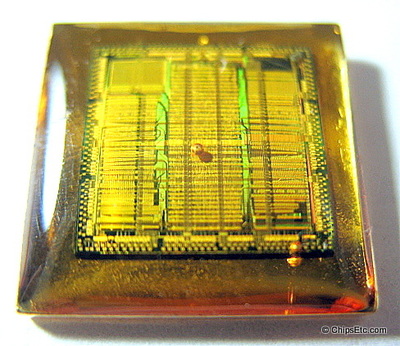

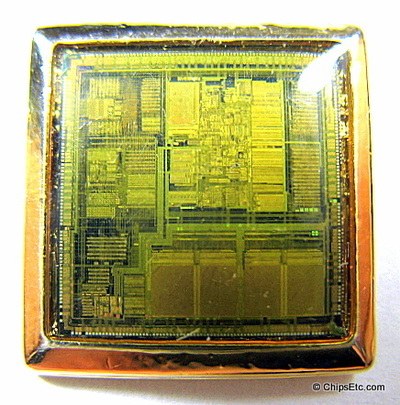

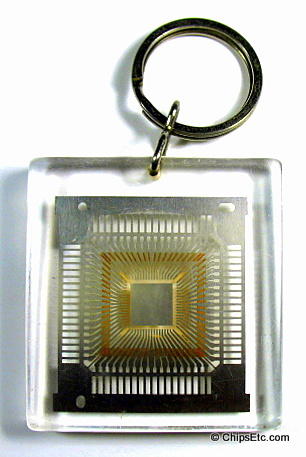

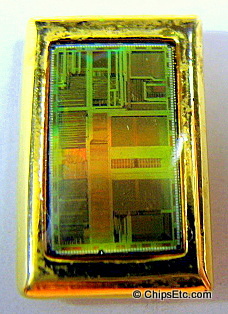

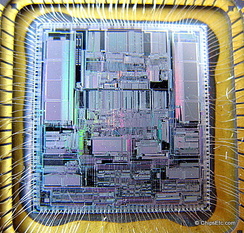

Motorola 68040 Microprocessor Close-up (1990)

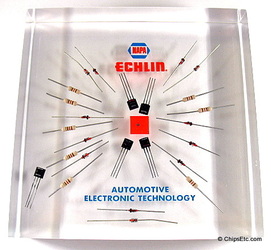

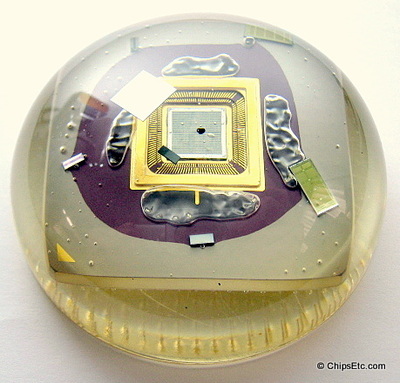

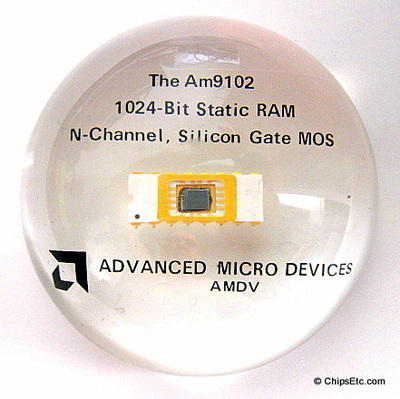

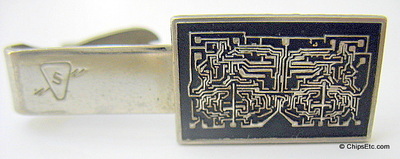

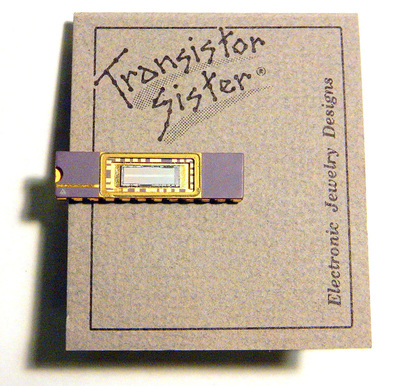

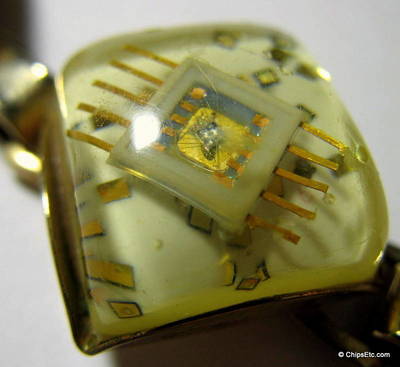

The Integrated Circuit (also known as an IC, chip or microchip) placed the previously separate transistors, resistors, capacitors and wiring onto a single chip made of semiconductor material (either silicon or germanium). The Integrated Circuit greatly reduced the size and cost of electronics and shaped the design of all later computers and other electronic devices.

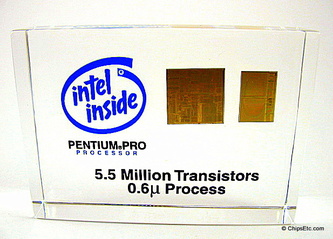

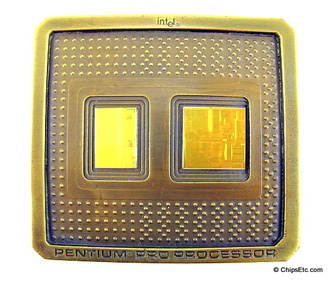

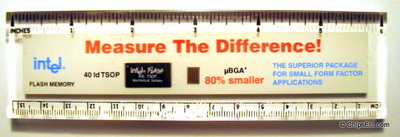

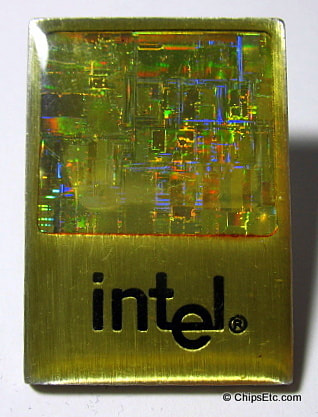

Computers today are dominated by two major microprocessor companies, Intel and AMD. The latest microprocessors from Intel, for example, contain billions of transistors. By comparison, the Intel 4004 chip from 1971 contained 2,300 transistors, and the most common Pentium chip from Intel in 1993 contained 3,100,000 transistors.
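To put the two historical figures in perspective, the short Python sketch below works out how many times the transistor count doubled between the 4004 and the Pentium. The function name `growth_summary` is made up for this illustration, and the calculation assumes smooth exponential growth between the two dates, which is a simplification.

```python
import math

# Transistor counts quoted in the text: (year, transistors per chip).
INTEL_4004 = (1971, 2_300)
PENTIUM = (1993, 3_100_000)

def growth_summary(earlier, later):
    """Return (growth factor, number of doublings, years per doubling).

    Assumes steady exponential growth between the two data points.
    """
    (year_a, count_a), (year_b, count_b) = earlier, later
    factor = count_b / count_a
    doublings = math.log2(factor)
    years_per_doubling = (year_b - year_a) / doublings
    return factor, doublings, years_per_doubling

factor, doublings, years_per_doubling = growth_summary(INTEL_4004, PENTIUM)
print(f"growth factor: {factor:,.0f}x")
print(f"doublings: {doublings:.1f}")
print(f"years per doubling: {years_per_doubling:.2f}")
```

Under these assumptions the count grew roughly 1,350-fold in 22 years, which works out to about one doubling every two years.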

Over the years, transistor technology has changed so that transistors could be made smaller and more of them could be fitted into a microprocessor, giving faster processors. Manufacturing continues to advance in miniaturizing transistors and microprocessors.

In 1965 Gordon E. Moore, a co-founder of Intel, wrote a paper describing how the number of transistors in integrated circuits had doubled every year from the invention of the integrated circuit until 1965, and he predicted that the trend would continue for at least ten years. Forty-five years on, the prediction is still somewhat correct and is now commonly known as Moore's Law. In a 2005 interview, Moore stated that the trend cannot continue forever, as transistors would eventually reach the limits of miniaturization at atomic levels. However, experts still insist that Moore's Law will continue for at least another decade or two.
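The doubling rule is easy to express as arithmetic. The following is a minimal sketch, not taken from Moore's paper: the function `projected_count` and the starting value of 64 are hypothetical and chosen only to show how quickly yearly doubling compounds over the ten years of the prediction.

```python
def projected_count(start_count, years, doubling_period_years=1.0):
    """Project a transistor count forward, assuming a fixed doubling period."""
    return start_count * 2 ** (years / doubling_period_years)

# Hypothetical starting point (illustrative only, not a figure from the text).
start = 64
for years in (0, 5, 10):
    print(years, round(projected_count(start, years)))
# prints:
# 0 64
# 5 2048
# 10 65536
```

Ten yearly doublings multiply the starting count by 1,024. Passing a longer `doubling_period_years`, such as 2.0, shows how sensitive the projection is to that one assumption.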


The History of the Integrated Circuit

The first successful demonstration of the microchip was on September 12, 1958 by Jack Kilby of Texas Instruments. The first commercially available integrated circuits were manufactured by Fairchild Semiconductor in 1961.

The integrated circuit is better known to the layperson as a computer chip, IC or microchip. Just about every electronic device you use today depends on it: computers, smartphones, smart televisions, automobiles, household appliances, even traffic signals. The list is endless.

The development of the integrated circuit was a huge event in the history of computing. Without this technology, it's unlikely that personal computers and handheld computing devices would have been developed and produced as early as they were.

The Need for the Integrated Circuit

No doubt you are familiar with early computers, monstrosities whose CPUs took up whole rooms and which were largely owned by government labs and research universities. These early computers depended on vacuum tubes to amplify electrical signals throughout the machine. Vacuum tubes were large, made of glass, and required regular maintenance: not exactly something that could be put into a consumer device. To top it off, they were slow, reducing these supercomputers to the equivalent of today's pocket calculator. The vacuum tube left much to be desired, and put inventors on a mission to find better ways to amplify electrical signals.

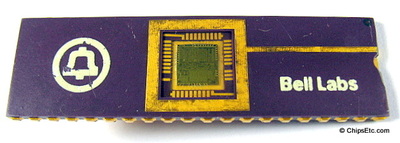

Transistors, which first came into use in the 1940s, had an obvious edge over vacuum tubes. They were faster, which increased computing power, and they were also smaller. Their downside? Transistors had to be linked together in a circuit without any errors, or the computer would not function correctly. This still limited the complexity of computers that could be based on transistor circuits.

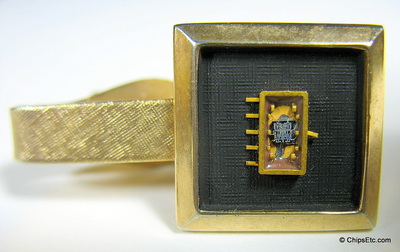

The invention of the integrated circuit is credited to Jack Kilby, an employee of Texas Instruments. In 1958, Kilby was tasked with developing an improved circuit for his employer's products. However, the direction his boss wanted to follow was not that of the integrated circuit, so Kilby waited until his co-workers were on vacation to pursue his idea of putting all the elements of a circuit on one small chip. He finished his prototype and presented it upon their return. The prototype was tested and it worked: Kilby's invention was deemed a success.

In 1959, Fairchild Semiconductor's Robert Noyce (who would later co-found Intel) was also credited as an initial inventor of the integrated circuit. Noyce made an important improvement on Kilby's initial design: he added a thin layer of metal to the chip to better connect the various components of the circuit.

Interestingly, an English engineer also described the initial idea for the integrated circuit, independently of Kilby and Noyce. Geoffrey Dummer envisaged a single circuit with all the components placed together on a layer of silicon material. In fact, Dummer is credited as the first to ever describe an integrated circuit, at a talk in Washington, D.C.; however, neither British corporations nor the military were interested in developing his idea.

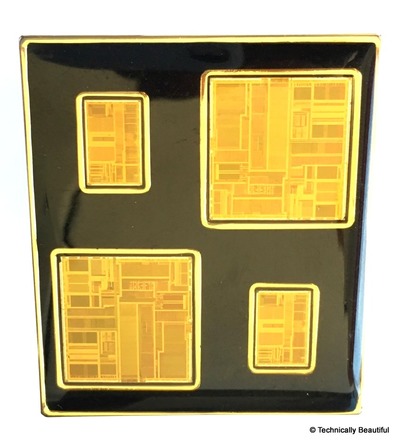

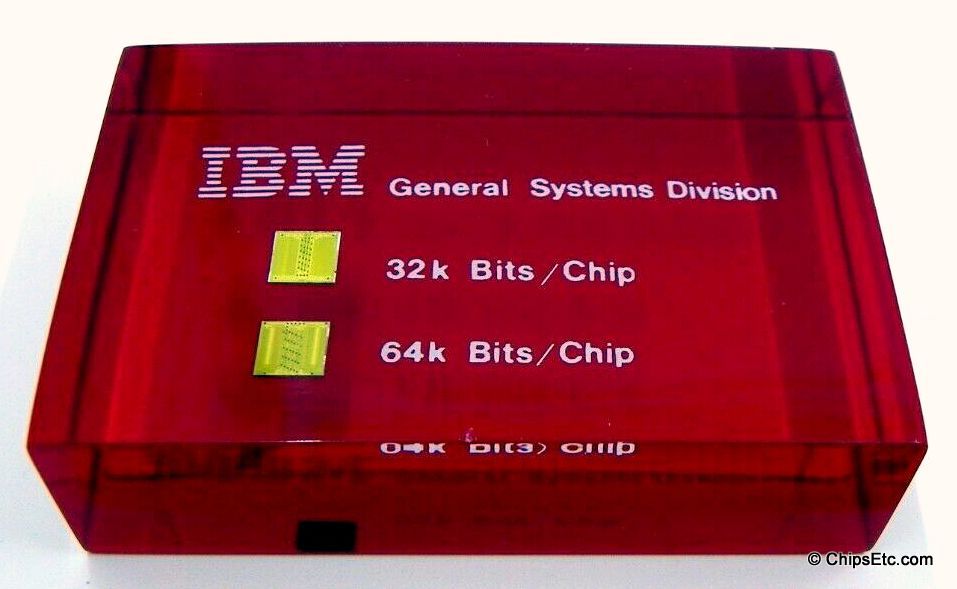

Generations of Integrated Circuits

1st - Small scale integration (SSI):

had 3 to 30 transistors/chip. Early 1960s

2nd - Medium scale integration (MSI):

had 30 to 300 transistors/chip. Mid to Late 1960s

3rd - Large scale integration (LSI):

had 300 to 3,000 transistors/chip. Mid 1970s

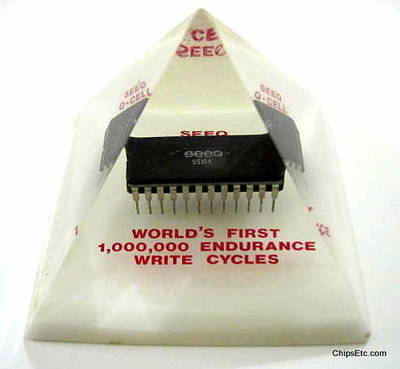

4th - Very large scale integration (VLSI):

had more than 3,000 transistors/chip. Early 1980s

5th - Ultra large scale integration (ULSI):

had more than one million transistors/chip. Mid 1980s
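The generations above amount to a lookup by transistor count. The sketch below is one hedged way to write that lookup in Python. The name `classify` is invented for this example, and because the listed ranges share their boundary values (30, 300 and so on), the code assumes a boundary value belongs to the higher class.

```python
# (lower bound, label) pairs taken from the list above, highest first.
# Assumption: a count exactly on a boundary is assigned to the higher class.
GENERATIONS = [
    (1_000_000, "ULSI"),
    (3_000, "VLSI"),
    (300, "LSI"),
    (30, "MSI"),
    (3, "SSI"),
]

def classify(transistors):
    """Return the integration-scale label for a transistor count.

    Returns None for counts below the smallest listed range (fewer than 3).
    """
    for lower_bound, label in GENERATIONS:
        if transistors >= lower_bound:
            return label
    return None

print(classify(2_300))      # Intel 4004 count quoted earlier -> LSI
print(classify(3_100_000))  # Pentium count quoted earlier -> ULSI
```

Ordering the thresholds from highest to lowest means the first match is always the correct class, so no upper bounds need to be stored.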

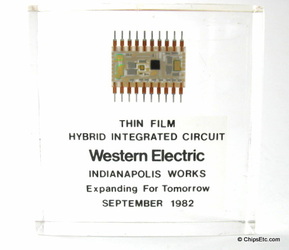

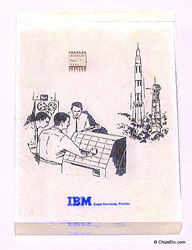

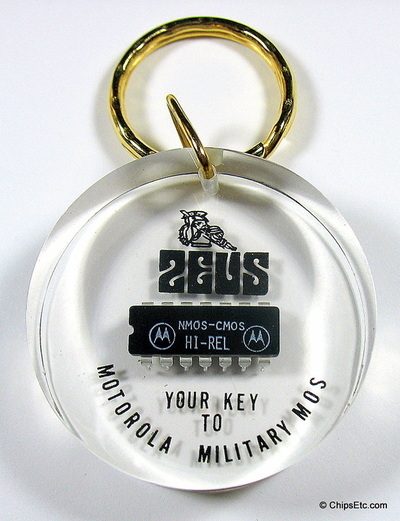

1st Generation Integrated Circuits in the 1960s were vital to early aerospace projects

The Minuteman missile and the Apollo program both needed lightweight digital computers for their inertial guidance systems. The Apollo guidance computer led the development of Integrated Circuit technology, while the Minuteman missile pushed it into mass production.

These aerospace programs purchased almost all of the available integrated circuits from 1960 through 1963, and so provided the demand that funded the production improvements. In turn, this demand was responsible for lowering production costs from $1000 per Integrated Circuit (in 1960 dollars) to a mere $25 per Integrated Circuit (in 1963 dollars).
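As a rough illustration of how steep that price drop was, the sketch below computes the overall reduction and the equivalent year-over-year change. The function name `annual_price_ratio` is hypothetical. The calculation assumes a steady decline over the three years and ignores the difference between 1960 and 1963 dollars.

```python
def annual_price_ratio(start_price, end_price, years):
    """Return the constant yearly price multiplier that links the two prices."""
    return (end_price / start_price) ** (1 / years)

START_PRICE = 1000  # dollars per Integrated Circuit in 1960, from the text
END_PRICE = 25      # dollars per Integrated Circuit in 1963, from the text

reduction = START_PRICE / END_PRICE
ratio = annual_price_ratio(START_PRICE, END_PRICE, years=3)
print(f"overall reduction: {reduction:.0f}x")
print(f"each year's price was about {ratio:.0%} of the previous year's")
```

Under these assumptions the price fell 40-fold, which is equivalent to each year's price being a little under a third of the previous year's.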

Integrated Circuits began to be used in consumer products at the turn of the 1970s, for example in FM inter-carrier sound processing in television receivers.

The first commercial computers to use Integrated Circuits were the Burroughs B2500 / B3500, introduced in 1968.